The Accidental Reviewer Part 2: Why Reviewing Becomes a Superpower in the Age of AI

(Photo by Towfiqu barbhuiya on Unsplash)

This is Part 2 of the series.

In Part 1, I talked about how I accidentally trained myself to review across multiple stacks starting from “minus”.

I forced myself to leave PR comments even when I had no idea what I was looking at, and eventually that habit turned into a cross-domain reviewing ability.

Now, in the AI era, that ability has become far more valuable than I expected.

An experiment with AI agents: building a small project from scratch

To explain why, here’s some context (again).

As mentioned in Part 1, I wrote a small tool (with the help of AI agents) to calculate how many PRs I had reviewed. After discovering the numbers, I thought:

This is useful… what if I add more features and turn it into a personal engineering dashboard?

Instead of writing everything by myself, I decided to run an experiment.

My rules:

- Use ChatGPT 5.2 Codex + VSCode only

- I can only prompt the AI to write code

- I cannot manually change more than two lines (I broke the rule once by accident, so I turned it into a rule, haha)

The results? In one day, with 28 commits, the AI and I built:

- A Streamlit dashboard showing merged/reviewed PRs across GitHub/GitLab

- A data pipeline that fetches PRs into a local SQLite database (first version was CSV!)

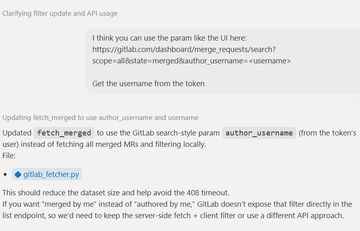

- Filtering by platform, kind (reviewed/merged) and date

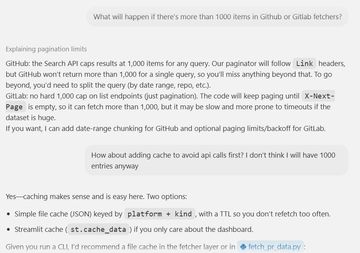

- A JSON caching layer to reduce API calls

- GitHub Actions for linting & tests

- `mise` for environment setup, `black`/`ruff` for formatting + linting

- Multiple refactors, test improvements and architecture cleanups

In total: 48 files changed, 2,670 insertions, 382 deletions.

(If you’re curious, here’s the repo: https://github.com/snowleo208/pr-data-check-tool)
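If you haven't built one before, a JSON caching layer can be quite small. The sketch below is not the code from the repo. `cached_fetch`, its parameters and the one-hour default are assumptions for illustration: it returns the cached response while the file is younger than `max_age_seconds`, and only calls the (hypothetical) `fetch` function otherwise.

```python
import json
import time
from pathlib import Path
from typing import Any, Callable


def cached_fetch(
    cache_path: Path,
    fetch: Callable[[], Any],
    max_age_seconds: float = 3600,  # assumed default: one hour
) -> Any:
    """Return cached JSON if it is fresh enough, otherwise fetch and cache it."""
    if cache_path.exists():
        # Use the file's modification time as the "last fetched" timestamp
        age = time.time() - cache_path.stat().st_mtime
        if age < max_age_seconds:
            return json.loads(cache_path.read_text(encoding="utf-8"))

    data = fetch()  # hypothetical call to the GitHub/GitLab API
    cache_path.parent.mkdir(parents=True, exist_ok=True)
    cache_path.write_text(json.dumps(data), encoding="utf-8")
    return data
```

Using one cache file per query (for example per platform and date range) keeps invalidation simple: deleting the file forces a fresh API call next time.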

It’s probably still slow and a bit buggy. For instance, I later realised the AI agent chose a synchronous approach, which becomes a bottleneck during large fetches. But hey, we built it in a single day with an AI agent (and I’m not even that familiar with Python!).
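One common way to ease that kind of bottleneck is to run network-bound page fetches in a thread pool so their waiting time overlaps. This is a sketch under assumptions, not the repo's code: `fetch_all_pages` and `fetch_page` are hypothetical names, and `max_workers` should stay small because GitHub and GitLab both rate-limit their APIs. An `asyncio`-based HTTP client would be another option.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def fetch_all_pages(
    fetch_page: Callable[[int], List[dict]],
    page_numbers: List[int],
    max_workers: int = 4,
) -> List[dict]:
    """Fetch several pages concurrently and flatten them, keeping page order."""
    # Each call mostly waits on the network, so threads let those waits overlap.
    # pool.map returns results in the order of page_numbers, not completion order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        pages = list(pool.map(fetch_page, page_numbers))
    return [pr for page in pages for pr in page]


if __name__ == "__main__":
    def fake_page(n: int) -> List[dict]:
        time.sleep(0.2)  # stand-in for one slow HTTP request
        return [{"page": n}]

    start = time.perf_counter()
    prs = fetch_all_pages(fake_page, [1, 2, 3, 4])
    print(len(prs), f"{time.perf_counter() - start:.1f}s")
```

With four simulated 0.2-second requests, the serial version would take about 0.8 seconds; the pooled version should finish in roughly the time of one request.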

During the experiment, I paired with the AI like I would pair with a knowledgeable junior engineer: asking questions, suggesting improvements, checking structure, challenging decisions and reviewing everything it wrote.

Then I realised something important: I was reviewing AI code the exact same way I review human code!

AI can write code, but… it still needs a reviewer!

At the beginning, Codex gave me huge chunks of code.

So I asked it to refactor. Then split things apart. Slow down. Explain its decisions. Rework error handling. Add tests.

After enough rounds of challenging questions, the model started producing code that matched my preferences: smaller, cleaner functions, fewer nested branches, clear responsibilities and better error handling.
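To make “fewer nested branches” concrete, here is a hypothetical before/after. The PR dictionary and its `merged_at` and `reviewed_by_me` keys are made up for the example. The first version nests every decision; the second rejects invalid input up front (a guard clause) and then returns early from flat conditions. Both return the same result for every input.

```python
from typing import Optional


def review_state_nested(pr: dict) -> Optional[str]:
    # Before: every decision is nested inside the previous one
    if pr:
        if pr.get("merged_at"):
            if pr.get("reviewed_by_me"):
                return "merged+reviewed"
            else:
                return "merged"
        else:
            if pr.get("reviewed_by_me"):
                return "reviewed"
            else:
                return None
    else:
        raise ValueError("empty PR payload")


def review_state(pr: dict) -> Optional[str]:
    # After: guard clause first, then flat checks with early returns
    if not pr:
        raise ValueError("empty PR payload")
    merged = bool(pr.get("merged_at"))
    reviewed = bool(pr.get("reviewed_by_me"))
    if merged and reviewed:
        return "merged+reviewed"
    if merged:
        return "merged"
    if reviewed:
        return "reviewed"
    return None
```

The behaviour is identical, but the second version is much easier to review: each condition can be checked on its own, and the error path is visible on the first line.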

(To confirm, I even asked the AI agent to create a style guide in the middle of my experiment!)

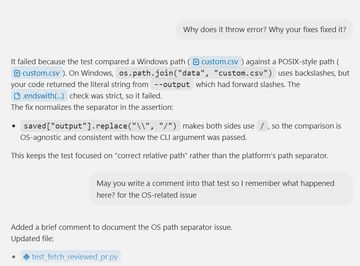

There was even a moment when a test failed and I genuinely didn’t understand why the fix worked. So I asked:

Why does this throw an error? Why does your fix work?

The AI agent explained, and I asked it to add that explanation as a comment in the tests.

When I asked the agent to migrate from CSV to SQLite, it changed nearly 200 lines across multiple files. I didn’t panic, because I had already asked the AI agent to write unit tests.
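Tests help most in a migration like this when they check behaviour (save some PRs, filter them) rather than storage details. Below is a minimal sketch of that idea using Python's built-in `sqlite3`. `PRStore`, its columns and the test are hypothetical, not the repo's actual code. Because the test only talks to `save()` and `query()`, it would keep passing whether the data lived in a CSV file or a SQLite table.

```python
import sqlite3
from typing import List, Optional

COLUMNS = ("id", "platform", "kind", "created_at")


class PRStore:
    """Hypothetical storage layer: callers only use save() and query()."""

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS prs ("
            " id TEXT PRIMARY KEY,"
            " platform TEXT NOT NULL,"
            " kind TEXT NOT NULL,"
            " created_at TEXT NOT NULL)"  # ISO 8601 dates sort correctly as text
        )

    def save(self, rows: List[dict]) -> None:
        # INSERT OR REPLACE makes re-fetching the same PR idempotent
        with self.conn:  # commits on success, rolls back on error
            self.conn.executemany(
                "INSERT OR REPLACE INTO prs (id, platform, kind, created_at) "
                "VALUES (:id, :platform, :kind, :created_at)",
                rows,
            )

    def query(
        self,
        platform: Optional[str] = None,
        kind: Optional[str] = None,
        since: Optional[str] = None,
    ) -> List[dict]:
        # Add a WHERE condition only for filters that were given;
        # values are always passed as parameters, never formatted into SQL
        clauses: List[str] = []
        params: List[str] = []
        if platform is not None:
            clauses.append("platform = ?")
            params.append(platform)
        if kind is not None:
            clauses.append("kind = ?")
            params.append(kind)
        if since is not None:
            clauses.append("created_at >= ?")
            params.append(since)
        sql = "SELECT id, platform, kind, created_at FROM prs"
        if clauses:
            sql += " WHERE " + " AND ".join(clauses)
        sql += " ORDER BY created_at"
        return [dict(zip(COLUMNS, row)) for row in self.conn.execute(sql, params)]


def test_query_filters_by_platform_kind_and_date() -> None:
    # Behavioural test: nothing here depends on SQLite vs CSV
    store = PRStore()
    store.save([
        {"id": "gh-1", "platform": "github", "kind": "reviewed", "created_at": "2024-01-10"},
        {"id": "gh-2", "platform": "github", "kind": "merged", "created_at": "2024-02-01"},
        {"id": "gl-1", "platform": "gitlab", "kind": "reviewed", "created_at": "2024-03-05"},
    ])
    assert [pr["id"] for pr in store.query(kind="reviewed")] == ["gh-1", "gl-1"]
    assert [pr["id"] for pr in store.query(platform="github", since="2024-01-15")] == ["gh-2"]
```

The same filters (platform, kind, date) that drive a dashboard's UI map directly onto optional `WHERE` conditions here.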

After 28 commits, I ended up with a project that I understood.

The Superpower of Reviewing

Before AI, engineering value was often associated with “how well you know a framework” or “how deep your language expertise is”.

But with AI now able to generate code at incredible speed, the skills that really make an engineer effective start to look more like:

- Choosing the right architecture

- Evaluating tradeoffs

- Spotting code smells

- Identifying hidden risks

- Ensuring maintainability

- Asking “what happens when this breaks?”

The exact skills we exercise when reviewing PRs!

Reviewing is no longer just a step that happens after coding. It’s becoming part of a continuous feedback loop while working alongside AI.

My “universal reviewing questions” work perfectly on AI too

In Part 1, I shared my list of questions for reviewing unfamiliar stacks. When used on AI-generated code, they work well too.

- How do we handle errors/edge cases here?

- Do we have error states?

- Do we have tests for this behaviour?

- Why do we need this? And if the answer is complex: could you add a comment explaining it so we don’t forget why? (…and more in Part 1)

None of them require knowing the language. That’s why these questions are a superpower that I want to share with everyone.

Final thoughts

Without working with AI agents, I would never have realised how quickly I can read and review code.

Reviewing started as something my managers encouraged me to do. Now, in the age of AI, it has unexpectedly become one of my most valuable engineering skills.

And the good news? It’s a skill anyone can practise every day. Just pair with an AI agent or another engineer and get better together!